AI is no longer just a tool - it’s now a core element of how software is created. The AI Software Development Lifecycle (AI-SDLC) integrates AI into every stage of software development, transforming workflows, team roles, and decision-making processes. Done well, this approach can deliver faster development, better quality, and smarter solutions.

Key insights include:

- AI as a collaborator: AI handles repetitive tasks, assists with coding, testing, and deployment, and supports decision-making across the lifecycle.

- New team roles: Specialized roles like ML engineers, MLSecOps specialists, and data scientists are essential for managing AI systems, data pipelines, and security.

- Governance and ethics: AI projects require robust governance, including bias checks, explainability, and compliance with privacy laws like GDPR and CCPA.

- Impact on productivity: Companies like Google and Amazon report that AI now contributes to over 20% of new code, saving thousands of developer hours annually.

- Continuous improvement: AI systems need constant monitoring for performance drift, retraining, and updates to maintain reliability.

Why it matters: AI is reshaping software development, enabling teams to deliver faster, reduce costs, and stay competitive. This playbook outlines how to integrate AI effectively while addressing risks and ensuring responsible practices.

Foundations of an AI-Native SDLC

How AI Changes the SDLC

AI doesn’t just make development faster - it reshapes the entire approach teams take toward the software development lifecycle (SDLC). Traditional SDLC models are built around static workflows. In contrast, AI-driven systems rely on learning from data, which makes elements like data quality, model performance, and continuous iteration absolutely critical.

This shift introduces the need for robust data pipelines, frequent model updates, and checkpoints that ensure responsible AI practices. MLOps has become essential, handling tasks like monitoring performance drift, managing data pipelines, and integrating ethical safeguards such as bias checks and robustness testing. As development moves from conventional software to machine learning and generative AI, uncertainty grows. This evolution calls for more flexible and responsive development strategies.
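To make "monitoring performance drift" concrete, here is a minimal sketch of one common drift signal, the Population Stability Index (PSI), which compares how a numeric feature is distributed in a baseline sample versus a recent sample. The function name, the ten-bin default, and the rule-of-thumb alert level of 0.25 are assumptions chosen for illustration; real pipelines typically rely on a monitoring library and tune these values per feature.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")

    lo, hi = min(expected), max(expected)
    # Equal-width bins over the baseline range; fall back to 1.0 if it is constant.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = max(0, min(bins - 1, idx))  # clamp out-of-range values to edge bins
            counts[idx] += 1
        # A small floor avoids log(0) when a bucket is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e = bucket_shares(expected)
    a = bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical alert level; a frequently quoted convention, not a standard.
DRIFT_ALERT_LEVEL = 0.25
```

A scheduled job could compute this per feature and open a retraining ticket whenever the score exceeds the chosen level.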

AI also changes how teams work together. Instead of the traditional step-by-step handoff process, AI development thrives on dynamic, cross-functional collaboration. Data scientists, engineers, and business analysts continuously refine decisions about technical and architectural aspects. This approach, often referred to as collaborative elaboration and construction, ensures that every team member plays a role in shaping the project.

These changes in technology and teamwork bring new demands for clearly defined roles and structured governance, which we’ll explore next.

Key Roles in an AI-Native SDLC

As AI transforms the development process, team roles must adapt to meet both technical and ethical challenges. Building AI-powered products requires specialized skills and redefined responsibilities across the team.

- Product Managers and Business Analysts: They define the problem, assess feasibility, and align AI solutions with business objectives, all while addressing ethical concerns.

- Data Scientists: Their responsibilities include collecting, cleaning, and preparing data. They handle tasks like exploratory analysis, data transformation, and labeling, as well as selecting, training, and evaluating models. This often involves techniques like hyperparameter tuning and bias analysis.

- ML Engineers: Focused on model architecture, optimization, and deployment, these engineers use tools like Docker and Kubernetes for scalability. They also incorporate security measures to defend against adversarial attacks.

- Software Engineers: Working within multidisciplinary teams, they write and review code (often with AI assistance) and integrate models into existing systems.

- DevOps Teams: They ensure systems remain scalable and maintainable through CI/CD pipelines, logging, and error tracking.

- QA Teams: Their tasks include A/B testing, real-world simulations, and human-in-the-loop validation.

- Security Professionals (MLSecOps): They protect AI systems by implementing security measures, conducting adversarial testing, and monitoring for emerging threats and compliance issues.

- Operations Teams: Responsible for knowledge transfer, they monitor deployed models, address performance issues, and manage updates or retraining as required.

As Atiba highlights, "AI development thrives when data scientists, software engineers, and business analysts collaborate".

Governance Requirements for AI Projects

With these technical changes and evolving roles, governance becomes essential to ensure AI systems remain ethical and compliant. Governance starts at the problem definition stage, where teams establish ethical guidelines, regulatory compliance measures, and data policies covering ownership, access, and lineage. This involves creating accountability mechanisms and ensuring transparency and explainability throughout the development process.

In industries with strict regulations, documenting data sources and maintaining explainability are especially important. Since AI systems often process large volumes of data, compliance with privacy laws like GDPR and CCPA requires organizations to safeguard sensitive information, ensure transparency in data usage, and give users control over their data.

Ongoing model governance is equally important. Teams should implement dashboards, alerts, and retraining routines to monitor models continuously and maintain reliability over time. Regular audits can help identify compliance issues, uncover biases across demographic groups, and test robustness using adversarial examples and edge cases.
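One way an audit can "uncover biases across demographic groups" is to compare how often the model returns a positive decision for each group. The sketch below computes per-group selection rates and the gap between the highest and lowest rate (often called the demographic parity difference). The function names and the sample data are illustrative assumptions, and this is only one of several fairness metrics a team might choose.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(
    predictions: Sequence[int], groups: Sequence[Hashable]
) -> Dict[Hashable, float]:
    """Share of positive predictions (1) per demographic group."""
    totals: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(
    predictions: Sequence[int], groups: Sequence[Hashable]
) -> float:
    """Difference between the highest and lowest group selection rate."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())
```

For example, with predictions `[1, 1, 0, 0, 1, 0, 0, 0]` and groups `["a"] * 4 + ["b"] * 4`, group "a" is selected half the time and group "b" a quarter of the time, so the gap is 0.25. What gap is acceptable is a policy decision for the governance team, not something the code can settle.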

"Secure model development minimizes vulnerabilities and risks, while thorough training processes help models learn from accurate, unbiased data, reducing the likelihood of unintended or harmful outputs." - Palo Alto Networks.

AI Integration Across SDLC Phases

AI Integration Across Software Development Lifecycle Phases

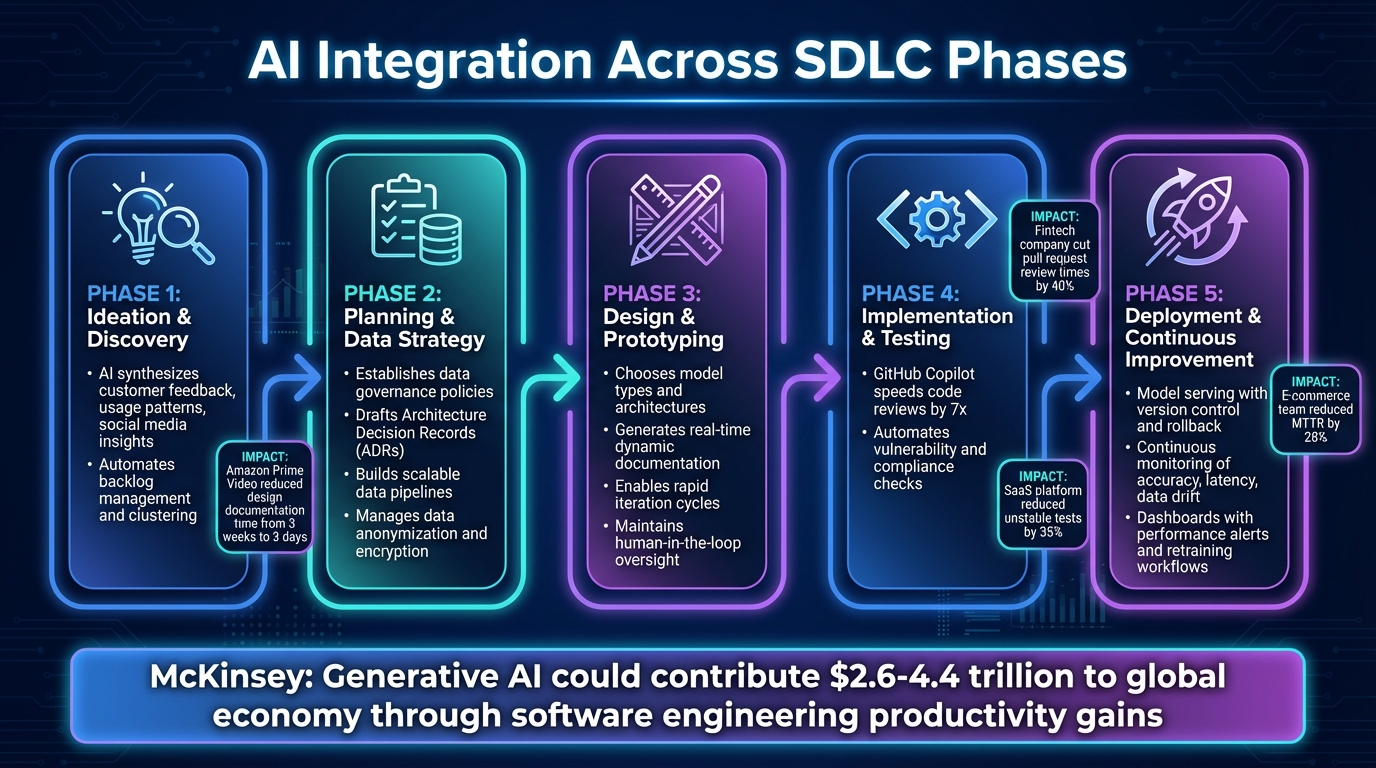

With governance and defined roles in place, AI can be applied to every phase of the SDLC. By embedding AI into multi-step workflows, teams are driving efficiency gains across the board. According to McKinsey, generative AI could contribute between $2.6 trillion and $4.4 trillion annually to the global economy, with much of this impact tied to gains in software engineering productivity.

Ideation and Discovery

AI is revolutionizing how teams identify opportunities and validate ideas. By synthesizing customer feedback, usage patterns, social media insights, and competitive research, AI can generate ready-to-use briefs. It also simplifies backlog management by clustering similar requests, eliminating duplicate user stories, and even proposing acceptance criteria automatically.
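The backlog clustering described above can be approximated with a very simple stand-in: grouping requests whose wording overlaps heavily. The sketch below uses Jaccard similarity on word sets rather than the embedding models an AI tool would likely use, so treat it as an illustration of the idea only. The function names and the 0.5 threshold are assumptions.

```python
import re
from typing import List, Set


def tokens(text: str) -> Set[str]:
    """Lowercased word set for a request title."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap of two word sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_requests(requests: List[str], threshold: float = 0.5) -> List[List[int]]:
    """Greedy clustering: each request joins the first cluster whose
    representative (its first member) is similar enough, else starts a new one."""
    clusters: List[List[int]] = []
    for i, request in enumerate(requests):
        current = tokens(request)
        for cluster in clusters:
            representative = tokens(requests[cluster[0]])
            if jaccard(current, representative) >= threshold:
                cluster.append(i)
                break
        else:
            clusters.append([i])
    return clusters
```

Clusters with more than one member are candidates for merging into a single user story, ideally after a human confirms they really describe the same need.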

Defining clear problem boundaries early is critical. Teams should perform stakeholder analyses, gather both functional and non-functional requirements, and set measurable KPIs while assessing ethical impacts. AI tools speed up prototyping and enable automated A/B testing, allowing teams to validate ideas faster while conserving resources. For instance, at Amazon Prime Video, a process that once required three weeks for design documentation and stakeholder alignment was reduced to just three days using AI tools - freeing up time for more strategic tasks.

Planning and Data Strategy

Effective planning for AI projects hinges on robust data governance and resource allocation. This aligns with earlier governance principles, ensuring data decisions meet ethical and compliance standards. Teams must establish policies around data ownership, access controls, retention schedules, and documentation of data lineage. Sensitive data should be anonymized and encrypted, with user consent carefully managed.
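As a small illustration of protecting sensitive fields before data enters a pipeline, the sketch below replaces selected values with keyed hashes using Python's standard `hmac` module. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can re-link records, and it does not replace encryption at rest or in transit. The function names, field names, and key handling are assumptions for the example.

```python
import hashlib
import hmac
from typing import Any, Dict, Set


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Keyed SHA-256 digest: stable for the same input and key,
    but not reversible without trying candidate inputs against the key."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(
    record: Dict[str, Any], sensitive_fields: Set[str], secret_key: bytes
) -> Dict[str, Any]:
    """Return a copy of the record with sensitive fields replaced by tokens."""
    return {
        key: pseudonymize(str(value), secret_key) if key in sensitive_fields else value
        for key, value in record.items()
    }
```

Because the same input always maps to the same token, joins across tables still work after scrubbing. In practice the key would come from a secrets manager rather than source code.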

AI tools can assist by drafting Architecture Decision Records (ADRs), outlining options with pros and cons, and flagging risks or performance concerns early through threat modeling and non-functional requirements analysis. Teams should also build scalable data pipelines for cleaning, integrating, transforming, augmenting, and labeling data.

Design and Prototyping

AI-driven design focuses on reducing uncertainty while maintaining human oversight. Teams must choose appropriate model types and design architectures that prioritize interpretability and integrate security features from the start. Techniques like hyperparameter optimization, transfer learning, and ensemble methods can enhance model performance.

Collaboration platforms that generate real-time, dynamic documentation are becoming essential. These tools support "human-in-the-loop" design principles, emphasizing the importance of human expertise in spatial reasoning and systems thinking. Rapid iteration cycles allow for quick testing and adjustments, paving the way for a faster and more reliable implementation phase.

Implementation and Testing

The implementation phase benefits greatly from AI tools that streamline coding and review processes. For example, GitHub Copilot can speed up code reviews by as much as seven times. Other examples include a fintech company that cut pull request review times by 40% using a repo-aware review agent, and a SaaS platform that reduced unstable tests by 35% through automated grouping and suggested fixes.

AI tools enable repo-aware code generation and automated reviews, while rigorous evaluations like k-fold cross-validation, bias assessments, and adversarial testing ensure model reliability. Integrating AI into DevSecOps pipelines further enhances security and compliance by automating checks for vulnerabilities, accessibility, and policy adherence.
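For readers less familiar with k-fold cross-validation, the sketch below shows the core mechanic in plain Python: split the sample indices into k folds, hold each fold out once for testing, and average the scores. The function names and the `fit_and_score` callback are hypothetical; most teams would use an established ML library, which also handles shuffling and stratification that this version omits.

```python
from typing import Callable, List, Tuple

Fold = Tuple[List[int], List[int]]  # (train indices, test indices)


def k_fold_indices(n_samples: int, k: int) -> List[Fold]:
    """Split range(n_samples) into k contiguous folds of near-equal size."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # The first (n_samples % k) folds get one extra sample.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    indices = list(range(n_samples))
    folds: List[Fold] = []
    start = 0
    for size in sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        folds.append((train, test))
        start += size
    return folds


def cross_validate(
    n_samples: int, k: int, fit_and_score: Callable[[List[int], List[int]], float]
) -> float:
    """Average the score returned by fit_and_score across all k folds."""
    scores = [fit_and_score(train, test) for train, test in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)
```

Every sample is used for testing exactly once, which gives a steadier estimate of model quality than a single train/test split.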

Deployment and Continuous Improvement

Deploying AI models requires strategies tailored to their unique needs, such as model serving, version control, and rollback procedures. Teams must select the right deployment environments - whether cloud, on-premises, or edge - and ensure efficient serving through RESTful APIs or gRPC. Consistent deployments can be achieved using tools like Docker, while load balancing and scaling strategies maintain operational stability.
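The versioning and rollback procedure mentioned above can be pictured as a small registry that remembers deployment history. The class below is a hypothetical in-memory sketch, not the API of any real model registry; a production setup would persist this state and tie each version to a container image or model artifact.

```python
from typing import List, Optional


class ModelRegistry:
    """Tracks which model version is live and allows stepping back one release."""

    def __init__(self) -> None:
        self._history: List[str] = []  # deployed versions, oldest first

    def deploy(self, version: str) -> None:
        """Record a new version as the live one."""
        self._history.append(version)

    @property
    def live(self) -> Optional[str]:
        """The currently served version, or None if nothing is deployed."""
        return self._history[-1] if self._history else None

    def rollback(self) -> str:
        """Drop the live version and return the one that is live afterwards."""
        if len(self._history) < 2:
            raise RuntimeError("no previous version to roll back to")
        self._history.pop()
        return self._history[-1]
```

Keeping rollback as a single, well-tested operation is the point: when a new model misbehaves in production, the team should not have to improvise.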

For example, an e-commerce team reduced its mean time to resolution (MTTR) by 28% using a chat-driven incident copilot. Continuous monitoring is crucial, with metrics like prediction accuracy, latency, and data drift tracked over time. Dashboards with performance alerts and retraining workflows help teams maintain reliability. Treating the development experience as a product itself allows for ongoing refinement based on feedback. This commitment to continuous improvement underscores the promise of AI in the SDLC: agile, data-driven progress.
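A minimal sketch of the alerting side of that monitoring: compare the latest accuracy, latency, and drift readings against agreed limits and report which ones are out of bounds. The threshold values and names here are assumptions for illustration; real limits depend on the product and its service-level objectives.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical limits; tune per model and per service-level objective.
    min_accuracy: float = 0.90
    max_p95_latency_ms: float = 300.0
    max_drift_score: float = 0.25


def check_model_health(
    accuracy: float,
    p95_latency_ms: float,
    drift_score: float,
    limits: Thresholds = Thresholds(),
) -> List[str]:
    """Return the names of the metrics that breached their limits."""
    alerts: List[str] = []
    if accuracy < limits.min_accuracy:
        alerts.append("accuracy")
    if p95_latency_ms > limits.max_p95_latency_ms:
        alerts.append("latency")
    if drift_score > limits.max_drift_score:
        alerts.append("drift")
    return alerts
```

An empty list means the model is within limits. A team might route "latency" alerts to the on-call engineer and treat "accuracy" or "drift" alerts as triggers for the retraining workflow.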

Responsible AI and Risk Management

As AI transforms the Software Development Life Cycle (SDLC), weaving ethical guidelines and strong risk management into the process is no longer optional. These practices complement existing governance frameworks and help ensure that AI-driven systems deliver value without compromising safety or integrity. Without a clear ethical foundation, AI systems can introduce risks that undermine trust and functionality, so governance, safety, and compliance become firm requirements as AI takes on a greater role in development.

Principles of Responsible AI

At its core, responsible AI is built on key principles: ethics, fairness, responsibility, security, explainability, transparency, traceability, provenance, data privacy, and human oversight. These principles act as guardrails, ensuring AI systems operate as intended. Since AI models learn from data, any biases or flaws in that data can be amplified by the system. This makes ethical data management a priority from the start.

One of the biggest hurdles remains the "black box" problem - when AI models can’t explain their decision-making processes. This is where explainable AI becomes critical. Models and tools that provide transparency allow product leaders to understand why an AI made a particular recommendation or generated specific outputs. Such transparency is vital for trust and usability.

Human oversight is another cornerstone of responsible AI. While AI can handle repetitive tasks efficiently, it lacks the contextual understanding and judgment required for critical decisions. A hybrid approach works best: let AI manage routine tasks like boilerplate code, while human developers focus on architecture, business logic, and nuanced decision-making.

By adhering to these principles, organizations can address the unique risks AI introduces and implement effective mitigation strategies.

Risk Categories and Mitigation Strategies

AI projects come with distinct risks that require targeted strategies to manage them effectively:

- Ethical and Bias Risks: Careful selection of training data is crucial. To address bias and hallucination issues, include dedicated tests in the continuous integration (CI) pipeline and validate outputs against benchmark datasets. Establishing a cross-functional "Responsible AI team" with members from engineering, legal, product, and security can help oversee high-risk applications and approvals.

- Security Risks: Incorporate Static Application Security Testing (SAST) and Dynamic Application Security Testing (DAST) into CI/CD pipelines. For sensitive data, prioritize on-premise or private inference solutions rather than relying on public AI APIs.

- Quality and Reliability Risks: Over-reliance on AI outputs can lead to errors. Require human review for all AI-generated changes and ensure traceable metadata is recorded. Avoid pushing AI-generated changes directly into production to prevent accumulating technical debt and introducing bugs.

- Compliance and Governance Risks: Develop a comprehensive model-use policy that defines approved models, data handling rules, and logging requirements. Every AI-influenced code change should include metadata such as model version, prompt, data snapshot, and author. This level of traceability is invaluable for audits and incident investigations.
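The traceability metadata listed in the last item (model version, prompt, data snapshot, author) could be captured in a small record attached to each AI-influenced change. The sketch below is one possible shape, with the human-review requirement from the quality item expressed as a merge check. The class name, the `reviewed_by` field, and the JSON output are assumptions for illustration rather than an established format.

```python
import json
from dataclasses import asdict, dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class AIChangeRecord:
    """Traceability metadata for one AI-influenced code change."""

    model_version: str
    prompt: str
    data_snapshot: str
    author: str
    reviewed_by: Optional[str] = None  # stays None until a human reviews the change

    def is_mergeable(self) -> bool:
        # Assumed policy: no AI-generated change merges without a human reviewer.
        return self.reviewed_by is not None

    def to_json(self) -> str:
        """Stable JSON form, suitable for an audit log or commit annotation."""
        return json.dumps(asdict(self), sort_keys=True)


def approve(record: AIChangeRecord, reviewer: str) -> AIChangeRecord:
    """Return a copy of the record marked as reviewed."""
    return replace(record, reviewed_by=reviewer)
```

Storing the record alongside the commit means an auditor can later answer "which model and prompt produced this change, and who signed off on it?" without reconstructing the history by hand.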

US Regulatory Compliance for AI

The regulatory landscape for AI in the United States is evolving rapidly. In December 2025, the White House issued an Executive Order aimed at creating a unified national AI policy. This framework seeks to eliminate conflicts between state-level regulations and reduce the regulatory burden on AI companies. Federal agencies are tasked with evaluating state laws that contradict national AI policies and potentially restricting funding to the states that enact them.

Product leaders must stay informed about these developments, as they will shape compliance requirements across industries. The creation of an AI Litigation Task Force by the US Attorney General signals heightened scrutiny of AI systems. To navigate this environment, organizations should maintain detailed documentation, including audit trails and provenance records. Implementing Software Bills of Materials (SBOMs) for AI models - tracking their lineage, training data, and dependencies - can strengthen compliance efforts and enable swift responses to regulatory inquiries.

Developing an Acceptable Use Policy in collaboration with IT, HR, Security, and Legal teams is another critical step. A tiered approach to tools can help manage risks effectively: designate "Tier 1" tools for vetted applications safe for confidential data, and "Tier 2" tools for unvetted public applications limited to non-sensitive tasks. This clear categorization empowers teams to make informed decisions without unnecessary escalation, laying the groundwork for a responsible, AI-driven organization.
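The tiered approach can be encoded as a simple lookup so that tooling, not just a policy document, answers the question "may I use this tool with this data?" The tool names below are made up, and denying unknown tools by default is an assumption of this sketch rather than something the policy above prescribes.

```python
from enum import Enum
from typing import Dict


class Tier(Enum):
    TIER_1 = 1  # vetted, safe for confidential data
    TIER_2 = 2  # unvetted public tool, non-sensitive tasks only


# Hypothetical catalog; a real one would be maintained by IT, Security, and Legal.
TOOL_TIERS: Dict[str, Tier] = {
    "internal-assistant": Tier.TIER_1,
    "public-chatbot": Tier.TIER_2,
}


def is_use_allowed(tool: str, data_is_sensitive: bool) -> bool:
    """Apply the tier rules to one tool and one kind of data."""
    tier = TOOL_TIERS.get(tool)
    if tier is None:
        return False  # assumption: tools not in the catalog are denied by default
    if tier is Tier.TIER_1:
        return True
    return not data_is_sensitive
```

With this catalog, `is_use_allowed("public-chatbot", data_is_sensitive=True)` returns `False`, while the same tool is allowed for non-sensitive work.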

Building an AI-Driven Product Organization

To truly integrate AI into your organization, it’s essential to embed it into existing teams, develop critical skills, and set clear metrics for success. The best results come from weaving AI into cross-functional teams rather than isolating it in separate departments that can become disconnected from the core workflow.

Organizational Structures for AI

Gone are the days of keeping AI teams separate from product development. Integrating AI directly into DevSecOps teams makes it an active collaborator. This approach encourages seamless teamwork between human members and AI tools, allowing AI to handle repetitive tasks while developers concentrate on design and strategy.

Take Cloudwalk, for example. They use OpenAI's Codex to translate specifications into fully functional code in just minutes. Engineers, product managers, designers, and operators rely on Codex daily for scripting, setting fraud rules, and building microservices. By automating routine tasks, they can quickly turn ideas into reality. Similarly, Virgin Atlantic leverages OpenAI's Codex VS Code Extension for tasks like log tracing, issue investigation, and change reviews. This speeds up root cause analysis and reduces manual triage, freeing teams to focus on system reliability and validating fixes.

To make the most of AI, consider adopting a "Delegate/Review/Own" model. Let AI handle feasibility checks, code generation, and test creation, while human experts review and refine the output. Leaders should collaborate with their teams to identify bottlenecks and explore how AI can simplify complex tasks. Start with well-defined projects and gradually expand AI’s responsibilities. Communicating that AI is a tool to enhance - not replace - developers’ work is critical. This encourages teams to embrace AI as a way to focus on high-value, business-critical activities.

These changes lay the groundwork for developing the specialized skills AI product leaders need.

Skills for AI Product Leaders

AI is reshaping the role of product leaders, shifting the focus from launching features to delivering measurable business outcomes. With AI augmenting data analysis and decision-making, product leaders now need a broader set of skills.

Key abilities include interpreting data, prompt engineering, detecting bias, and planning system integrations. Beyond the technical know-how, leaders must connect technical efforts to business goals, ensure compliance through governance, and balance trade-offs like model accuracy versus latency or customer experience.

Start small with low-risk AI experiments - like using AI to summarize meetings or extract insights from analytics - and then scale up. To get leadership buy-in, clearly articulate the specific problems AI will address and the expected outcomes. It’s also crucial to maintain human oversight to avoid over-reliance on AI and to address issues like data quality or bias. Jing Hu, Senior Global Product Manager at Just Eat, highlights the human element:

"As product managers, our unique blend of strategic thinking, empathy, adaptability, and real-world understanding still sets us apart in this AI-driven landscape".

The influence of AI is already evident. In Q4 2024, Pinterest revealed that over 20% of the code submitted by its 1,800 engineers was generated by GitHub Copilot. Similarly, Google's CEO reported that more than 25% of new Google code is now AI-generated, marking a significant shift in software development practices.

By cultivating these skills, organizations can track real progress in AI maturity and its impact on business goals.

Measuring AI Maturity and Success

To gauge AI maturity, focus on adoption, engagement, and the resulting business impact. Start by tracking how many team members are actively using AI tools and measure their engagement with these technologies.

As AI adoption grows, shift your attention to business results. Key metrics include cycle time reductions (how quickly features are delivered), model performance indicators (accuracy, latency, error rates), and business outcomes like revenue growth, cost savings, and improved customer satisfaction. When implemented effectively, AI can boost developer productivity by 3–5 times.
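Cycle time reduction is straightforward to compute once delivery times are logged. The sketch below compares the median days-to-delivery before and after an AI rollout; using the median rather than the mean is a choice made here so that one unusually slow feature does not dominate the result. The function name and sample figures are illustrative assumptions.

```python
from statistics import median
from typing import Sequence


def cycle_time_reduction(before_days: Sequence[float], after_days: Sequence[float]) -> float:
    """Fractional drop in median cycle time (0.5 means features ship in half the time)."""
    baseline = median(before_days)
    if baseline <= 0:
        raise ValueError("baseline cycle time must be positive")
    return (baseline - median(after_days)) / baseline
```

For instance, if features took 10, 12, and 8 days before and 5, 6, and 4 days after, the medians are 10 and 5, so the reduction is 0.5. A negative result means delivery got slower, which is worth knowing too.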

Improving AI maturity requires strong executive support, clear policies, dedicated leadership, and ongoing training for employees. A phased approach works best: begin with foundational steps like setting up governance frameworks and acceptable use policies. Then, focus on automating repetitive tasks and gradually move toward creating AI-driven features that add value for customers. Remember, governance, ethics, and security are essential for a mature AI-driven software development lifecycle (SDLC). Poor governance can lead to setbacks, while robust frameworks consistently drive positive results.

As AI becomes a core part of software development rather than just a helpful tool, tracking maturity is vital to remain competitive. Measure progress across three key areas: people (skills and adoption), processes (standardized workflows and governance), and technology (platform integration and security). This holistic approach ensures your organization is truly AI-driven.

Conclusion: Implementing the AI-SDLC

The strategies outlined above serve as the foundation for successfully integrating AI into the software development lifecycle (AI-SDLC). This isn't a one-and-done initiative - it’s an ongoing process that requires regular monitoring, fine-tuning, and adjustments over time. Companies that achieve meaningful results treat this as a cycle of continuous learning and improvement, embedding it into every phase of their development process.

Consider this: organizations that have implemented AI throughout their development lifecycle report a 40% reduction in development time and a 30% decrease in costs. By 2025, companies like Asana and Ramp had adopted Graphite's AI-powered review tools, enabling each engineer to save over 7 hours per week and allowing Ramp to ship code three times faster.

To make this work, start by clearly defining your business problem, objectives, and key performance indicators (KPIs). Build a robust data strategy to ensure consistent data acquisition, quality, and governance. Assemble multidisciplinary teams - data scientists, machine learning engineers, and business analysts - to work collaboratively using agile, iterative AI development practices. Human oversight remains critical to validate outputs, provide context, and maintain quality.

Long-term reliability requires careful monitoring. Use dashboards, alerts, and retraining routines to track KPIs and address any system degradation over time. These steps align with the iterative refinements discussed earlier, reinforcing the importance of ongoing maintenance to keep AI systems performing at their best.

The move toward AI-driven development is already reshaping the industry. Product leaders who embrace this shift, invest in upskilling their teams, and adopt the right tools and frameworks will position their organizations to deliver faster, innovate smarter, and stay competitive in an increasingly AI-focused world. Following these steps ensures a smoother transition to an AI-native development approach.

FAQs

How does integrating AI change traditional software development roles?

Integrating AI into software development is changing the game by automating tasks that were once time-consuming, like coding, testing, and deployment. This automation frees up developers to concentrate on strategic, creative, and more impactful work.

AI doesn’t just streamline tasks - it also enhances team collaboration, accelerates workflows, and demands new expertise. Skills like data management, training AI models, and overseeing AI systems are becoming essential. By embracing these shifts, teams can harness the full power of AI-driven development while keeping their work aligned with broader business objectives.

What are the key governance practices for deploying AI ethically?

To ensure ethical AI deployment, it’s crucial to weave fairness, transparency, and accountability into every step of the software development process. This means regularly checking for biases and potential risks, adopting explainability standards, and setting clear guidelines to safeguard data privacy and security.

Keep a close eye on AI systems to detect unintended biases in their results and ensure they comply with regulations like GDPR and CCPA. By prioritizing these practices, organizations can foster trust while aligning their AI efforts with both ethical principles and legal requirements.

What are the best ways to measure the success of AI in software development?

To evaluate how AI is performing in software development, it's important to track metrics that highlight both technical progress and business value. Key indicators to watch include deployment velocity, code quality, and operational efficiency. Beyond these, keeping an eye on change failure rates, test coverage, and a decrease in security incidents can provide deeper insights into AI's influence on development processes.

Pairing these metrics with consistent feedback from cross-functional teams gives companies a well-rounded understanding of how AI is driving business objectives and enhancing development workflows.
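Two of the indicators named in this answer, deployment velocity and change failure rate, can be derived from a plain deployment log. The sketch below assumes each log entry records the date and whether the release caused an incident; the `Deployment` type and both function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass(frozen=True)
class Deployment:
    day: date
    caused_incident: bool  # True if the release needed a rollback or hotfix


def change_failure_rate(deployments: List[Deployment]) -> float:
    """Share of deployments that caused an incident."""
    if not deployments:
        return 0.0
    failures = sum(1 for d in deployments if d.caused_incident)
    return failures / len(deployments)


def deployments_per_week(deployments: List[Deployment]) -> float:
    """Average weekly deployment count over the window covered by the log."""
    if not deployments:
        return 0.0
    days = [d.day for d in deployments]
    span_days = (max(days) - min(days)).days + 1  # inclusive of first and last day
    return len(deployments) / (span_days / 7)
```

Tracking both together matters: shipping more often is only an improvement if the failure rate does not climb with it.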